Pyramid Flow: Your Complete Guide to Open-Source AI Video Generation (2025 Edition)

Stepping into 2025, I can’t help but get excited about the latest breakthroughs in AI video generation technology. Sure, we’ve all seen those mind-blowing AI-generated images popping up everywhere (you know, the ones from Midjourney and Stable Diffusion that flood our social media feeds). But here’s what’s got me absolutely buzzing: AI video generation, specifically an incredible open-source tool called Pyramid Flow.

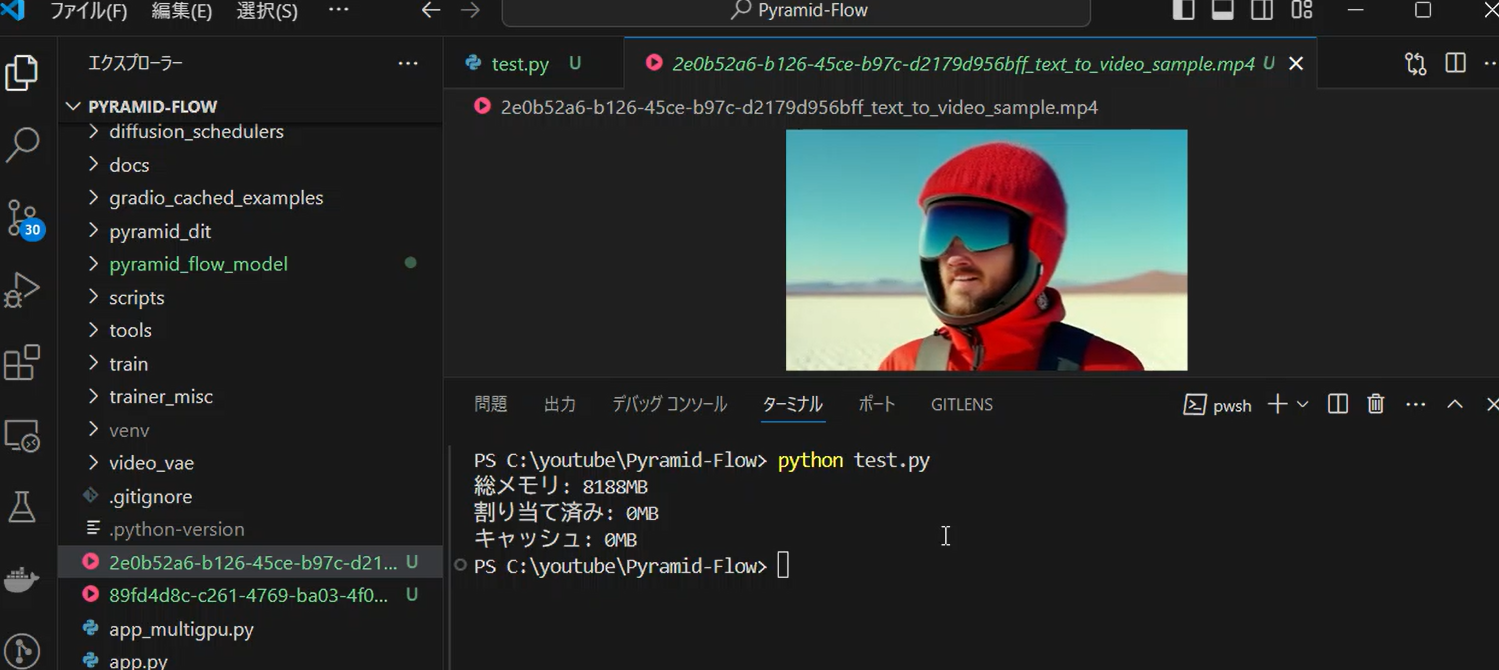

I recently dove deep into this game-changing tool, and I’ve got to tell you, it’s pretty wild. You literally type in what you want to see, hit enter, and boom — you’ve got a video. Even better? You can take your favorite photos and watch them come alive with movement. Trust me, the first time I saw it happen, I had to pick my jaw up off the floor.

Here’s what really sold me on Pyramid Flow: you don’t need to be some coding wizard to use it. They’ve built this super clean browser interface that makes the whole process feel as simple as posting on social media. Of course, you’ll need to do some initial setup (more on that in a bit), but once you’re in, it’s surprisingly straightforward.

Important Update (2025): Pyramid Flow is now accepted for presentation at ICLR 2025 and has undergone significant improvements with the introduction of the miniFLUX architecture, which offers better human structure representation and motion stability compared to the original SD3-based models.

In this post, I’m going to walk you through my hands-on experience with Pyramid Flow, including the latest updates and model variants. Whether you’re a content creator looking to level up your game or just someone who loves playing with cutting-edge tech, you’re in for a treat.

What’s Pyramid Flow? (And Why It’s Revolutionary)

Remember when turning your ideas into videos meant spending hours learning complex editing software? Those days are over. Pyramid Flow is this incredible open-source project that takes either your written descriptions or static images and transforms them into smooth, flowing videos. Built on a revolutionary technique called Pyramidal Flow Matching, it’s designed to compete directly with proprietary systems like OpenAI’s Sora — but with one crucial difference: it’s completely open to the public.

The technology uses a sophisticated pyramidal structure that progressively refines video generation through multiple stages, only applying full resolution at the final stage. This approach dramatically reduces computational requirements while maintaining exceptional quality. We’re talking professional-quality videos here — up to 10 seconds long at 768p resolution running at a smooth 24 frames per second.
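To put rough numbers on that claim, here's a back-of-the-envelope Python sketch. It's illustrative only: it assumes three stages where each earlier stage halves height and width, the same number of steps per stage, and a 16-pixel footprint per token. None of those values come from the paper; they just show why skipping full resolution in the early stages saves work.

```python
def stage_token_counts(height, width, num_stages=3, patch=16):
    """Tokens per frame at each stage, coarsest first.

    Illustrative assumptions: each earlier stage halves height and width,
    and every token covers a patch x patch block of pixels.
    """
    counts = []
    for stage in range(num_stages):
        scale = 2 ** (num_stages - 1 - stage)  # 4, 2, 1 for three stages
        counts.append((height // scale // patch) * (width // scale // patch))
    return counts


def relative_cost(height, width, num_stages=3, patch=16):
    """Token count of the pyramid relative to running every stage at full resolution."""
    counts = stage_token_counts(height, width, num_stages, patch)
    return sum(counts) / (num_stages * counts[-1])


if __name__ == "__main__":
    print(stage_token_counts(768, 1280))
    print(f"{relative_cost(768, 1280):.2f}")
```

Under these assumptions the pyramid touches about 44% of the tokens that three full-resolution stages would. Because attention cost grows faster than linearly with token count, the saving in actual compute would be larger still.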

The project repository is available at https://github.com/jy0205/Pyramid-Flow, and you can explore the project page at https://pyramid-flow.github.io/.

Key Features of Pyramid Flow:

- Multiple Model Variants: Choose between miniFLUX (supports 1024p images, 384p and 768p video) and SD3-based models (supports 768p and 384p video)

- Extended Video Duration: Generate 5-second videos at 384p or up to 10-second videos at 768p

- Image-to-Video Conversion: Transform still images into dynamic scenes

- Memory Efficient: CPU offloading and multi-GPU support for systems with limited VRAM

- Training Efficiency: Achieved high-quality results with only 20.7k A100 GPU training hours

Before We Dive In: System Requirements (Updated)

Before we start creating AI videos, let’s make sure you’ve got everything set up properly. The requirements have been standardized since the initial release:

Hardware Requirements:

- Minimum: NVIDIA GPU with 8GB VRAM (with CPU offloading enabled)

- Recommended: NVIDIA GPU with 16GB+ VRAM for smoother performance

- Multi-GPU Support: Can leverage 2 or 4 GPUs for faster generation

Software Requirements:

- Operating System: Windows, Linux, or macOS (Apple Silicon supported)

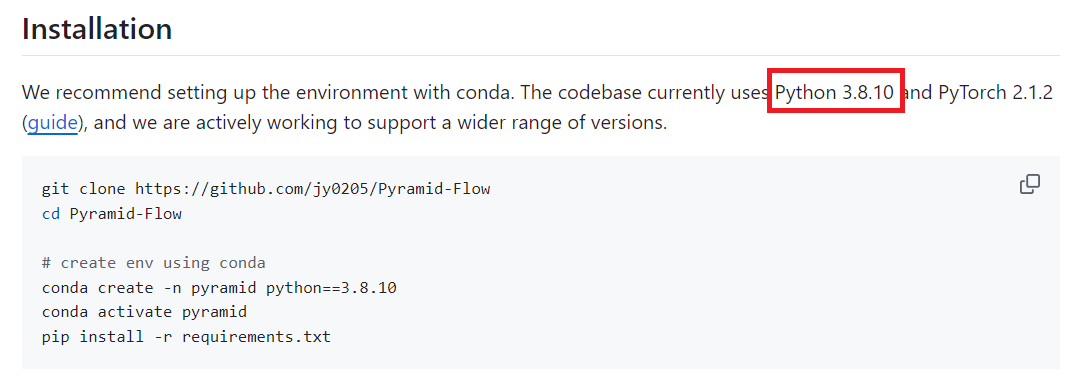

- Python: Version 3.8.10 (specific version required for compatibility)

- PyTorch: Version 2.1.2 (specified in requirements)

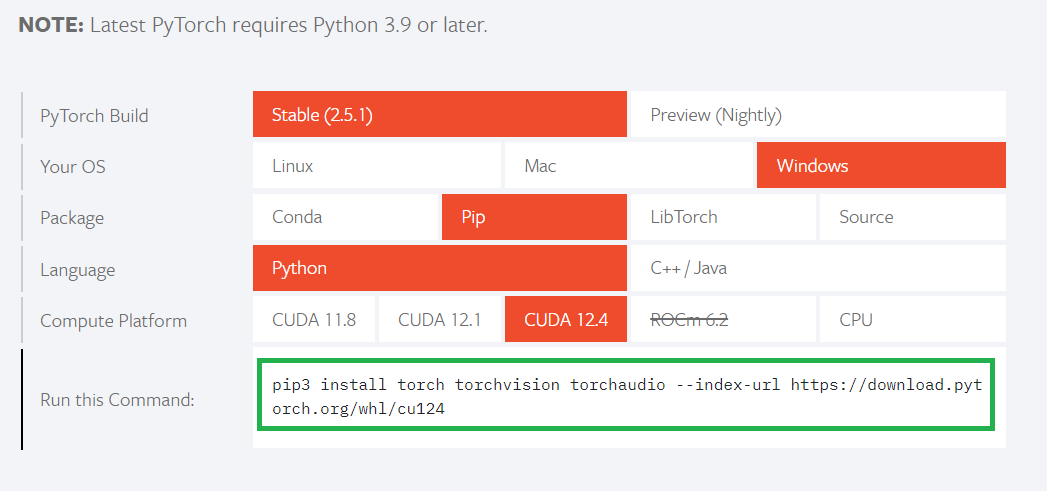

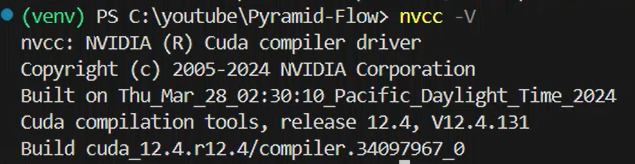

- CUDA: PyTorch 2.1.2 wheels are built for CUDA 11.8 and 12.1; the 12.1 wheels also run on CUDA 12.2 through 12.4 setups

- Code Editor: Visual Studio Code (recommended) or any text editor

Think of this setup like building a workshop — we need the right tools before we can start creating. VSCode will be our workbench, Python our toolset, and that NVIDIA graphics card? That’s our power tool that’ll do the heavy lifting.

Setting Up Your Python Environment

Step 1: Install Python 3.8.10

The official setup instructions use Python 3.8.10, so it's the safest choice for compatibility. You have several options:

Option 1: Direct Installation

Download Python 3.8.10 directly from python.org. This is the simplest approach if you don’t need multiple Python versions.

Option 2: Using Conda (Recommended)

If you’re using Anaconda or Miniconda:

conda create -n pyramid python==3.8.10

conda activate pyramid

Option 3: Using pyenv

For advanced users managing multiple Python versions:

pyenv install 3.8.10

pyenv local 3.8.10

Always verify your Python version after installation:

python --version

# Should output: Python 3.8.10

VSCode Tip: If using VSCode, completely close and restart the application after changing Python versions for the changes to take effect.

Getting the Project Files

Cloning the Repository

Use Git to clone the official repository:

git clone https://github.com/jy0205/Pyramid-Flow

cd Pyramid-Flow

Alternative: If you don’t have Git installed, you can download the ZIP file directly from GitHub’s green “Code” button.

Installing Dependencies

Step 1: Create a Virtual Environment (skip this if you're already using the Conda environment from above)

python -m venv venv

# On Windows:

venv\Scripts\activate

# On Linux/Mac:

source venv/bin/activate

You should see (venv) in your terminal prompt, indicating the virtual environment is active.

Step 2: Upgrade pip and Install Requirements

python -m pip install --upgrade pip

pip install -r requirements.txt

The requirements.txt lists all necessary packages with version constraints, including:

- torch==2.1.2

- torchvision==0.16.2

- transformers==4.39.3

- accelerate==0.30.0

- diffusers>=0.30.1

- And many others…
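If you want to confirm that pip actually installed those versions (easy to break when you later reinstall PyTorch for a different CUDA build), a small stdlib-only check can compare what's installed against the exact pins above. This is my own helper, not part of the project. It skips diffusers because that entry is a minimum (>=) rather than a pin, and it ignores local suffixes such as +cu121 that CUDA-specific torch wheels add.

```python
from importlib.metadata import PackageNotFoundError, version

# Exact pins from the requirements list above (diffusers is a minimum, so it's left out).
PINS = {
    "torch": "2.1.2",
    "torchvision": "0.16.2",
    "transformers": "4.39.3",
    "accelerate": "0.30.0",
}


def find_mismatches(pins, get_version=version):
    """Return {package: installed version or None} for each pin that doesn't match."""
    mismatches = {}
    for name, wanted in pins.items():
        try:
            installed = get_version(name)
        except PackageNotFoundError:
            installed = None
        # CUDA wheels report versions like "2.1.2+cu121"; compare without the local suffix.
        if installed is None or installed.split("+")[0] != wanted:
            mismatches[name] = installed
    return mismatches


if __name__ == "__main__":
    problems = find_mismatches(PINS)
    for name, installed in problems.items():
        print(f"{name}: expected {PINS[name]}, found {installed or 'nothing'}")
    if not problems:
        print("All pinned packages match.")
```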

Downloading Model Checkpoints

New Model Options (2025)

Pyramid Flow now offers two main model architectures:

1. MiniFLUX Models (Recommended for Better Quality)

The newer miniFLUX models show significant improvements in human structure and motion stability:

from huggingface_hub import snapshot_download

model_path = './models' # Your chosen directory

snapshot_download("rain1011/pyramid-flow-miniflux",

local_dir=model_path,

local_dir_use_symlinks=False,

repo_type='model')

MiniFLUX Capabilities:

- 1024p image generation

- 384p video generation (5 seconds)

- 768p video generation (10 seconds)

2. SD3-Based Models (Original)

For those preferring the original architecture:

snapshot_download("rain1011/pyramid-flow-sd3",

local_dir='./models-sd3',  # keep SD3 files separate from the miniFLUX download

local_dir_use_symlinks=False,

repo_type='model')

SD3 Capabilities:

- 768p video generation (10 seconds)

- 384p video generation (5 seconds)
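The capabilities above fit in a small lookup that sanity-checks a clip before you commit to a multi-gigabyte download. This is a hypothetical helper of my own (plan_download isn't part of Pyramid Flow), and the limits are simply the ones listed in this guide.

```python
# Maximum clip length in seconds per (architecture, resolution), as listed above.
MAX_SECONDS = {
    ("miniflux", "384p"): 5,
    ("miniflux", "768p"): 10,
    ("sd3", "384p"): 5,
    ("sd3", "768p"): 10,
}

# Hugging Face repositories used in the download snippets above.
REPO_IDS = {
    "miniflux": "rain1011/pyramid-flow-miniflux",
    "sd3": "rain1011/pyramid-flow-sd3",
}


def plan_download(architecture, resolution, seconds):
    """Validate a requested clip and return the repository to download."""
    key = (architecture, resolution)
    if key not in MAX_SECONDS:
        raise ValueError(f"unsupported combination: {architecture} at {resolution}")
    limit = MAX_SECONDS[key]
    if not 0 < seconds <= limit:
        raise ValueError(f"{architecture} at {resolution} supports at most {limit}s, got {seconds}s")
    return REPO_IDS[architecture]


if __name__ == "__main__":
    print(plan_download("miniflux", "768p", 10))
```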

Launching the Application

Basic Launch

After downloading the models, update the model path in app.py (around line 36) to point to your downloaded models, then:

python app.py

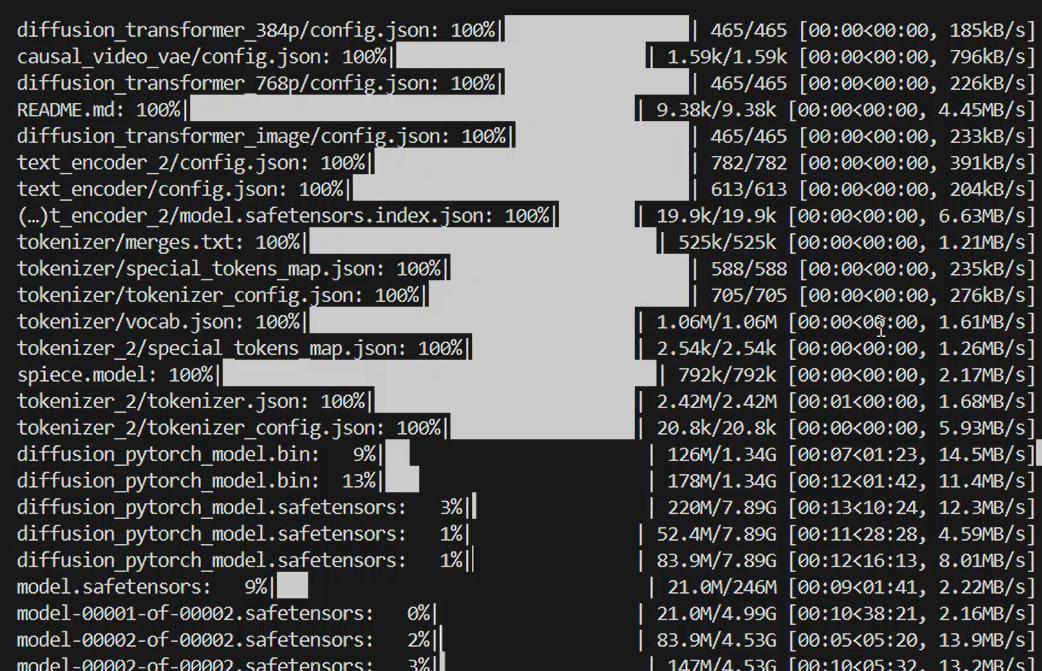

What to Expect on First Launch

diffusion_transformer_768p/config.json: 100%|█████████████████| 465/465 [00:00<00:00, 226kB/s]

README.md: 100%|█████████████████████████████████████████| 9.38k/9.38k [00:00<00:00, 4.45MB/s]

diffusion_transformer_image/config.json: 100%|████████████████| 465/465 [00:00<00:00, 233kB/s]

text_encoder_2/config.json: 100%|█████████████████████████████| 782/782 [00:00<00:00, 391kB/s]

text_encoder/config.json: 100%|███████████████████████████████| 613/613 [00:00<00:00, 204kB/s]

(…)t_encoder_2/model.safetensors.index.json: 100%|███████| 19.9k/19.9k [00:00<00:00, 6.63MB/s]

tokenizer/merges.txt: 100%|████████████████████████████████| 525k/525k [00:00<00:00, 1.21MB/s]

tokenizer/special_tokens_map.json: 100%|██████████████████████| 588/588 [00:00<00:00, 235kB/s]

tokenizer/tokenizer_config.json: 100%|████████████████████████| 705/705 [00:00<00:00, 276kB/s]

tokenizer/vocab.json: 100%|██████████████████████████████| 1.06M/1.06M [00:00<00:00, 1.61MB/s]

tokenizer_2/special_tokens_map.json: 100%|███████████████| 2.54k/2.54k [00:00<00:00, 1.26MB/s]

spiece.model: 100%|████████████████████████████████████████| 792k/792k [00:00<00:00, 2.17MB/s]

tokenizer_2/tokenizer.json: 100%|████████████████████████| 2.42M/2.42M [00:01<00:00, 1.68MB/s]

tokenizer_2/tokenizer_config.json: 100%|█████████████████| 20.8k/20.8k [00:00<00:00, 5.93MB/s]

model.safetensors: 100%|███████████████████████████████████| 246M/246M [00:49<00:00, 5.00MB/s]

diffusion_pytorch_model.bin: 100%|███████████████████████| 1.34G/1.34G [02:03<00:00, 10.9MB/s]

Fetching 24 files: 17%|██████▌ | 4/24 [02:03<12:53, 38.69s/it]

diffusion_pytorch_model.safetensors: 18%|██▋ | 1.38G/7.89G [02:02<12:30, 8.66MB/s]

diffusion_pytorch_model.safetensors: 40%|██████ | 3.16G/7.89G [04:16<04:17, 18.4MB/s]

diffusion_pytorch_model.safetensors: 32%|████▊ | 2.53G/7.89G [04:16<05:16, 16.9MB/s]

diffusion_pytorch_model.safetensors: 32%|████▊ | 2.55G/7.89G [04:15<15:01, 5.92MB/s]

model-00001-of-00002.safetensors: 29%|█████▏ | 1.43G/4.99G [02:01<03:59, 14.9MB/s]

model-00001-of-00002.safetensors: 64%|███████████▌ | 3.22G/4.99G [04:15<02:12, 13.4MB/s]

model-00002-of-00002.safetensors: 27%|████▉ | 1.24G/4.53G [01:59<06:22, 8.62MB/s]

model-00002-of-00002.safetensors: 59%|██████████▋ | 2.69G/4.53G [04:14<03:21, 9.12MB/s]

The application will:

- Download the model checkpoints if they aren't already present (as the log above shows, this can take a while), then load them

- Start the Gradio web interface

- Serve the interface at http://localhost:7860 (Gradio's default port); open that address in your browser if a tab doesn't open automatically

GPU Configuration

Checking CUDA Availability

First, check which CUDA toolkit is installed (nvidia-smi additionally reports the highest CUDA version your driver supports):

nvcc -V

Installing PyTorch with CUDA Support

For CUDA 12.x (for example, 12.4):

# CUDA 12.1 binaries are compatible with CUDA 12.4

pip install torch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 --index-url https://download.pytorch.org/whl/cu121

For CUDA 11.8:

pip install torch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 --index-url https://download.pytorch.org/whl/cu118

Note: PyTorch CUDA 12.1 binaries work with CUDA 12.2, 12.3, and 12.4 installations.
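To automate the choice, the sketch below parses the output of nvcc -V and picks one of the two wheel indexes used above. It's a helper of my own, not part of the project, and it deliberately covers only the cases this guide discusses (11.8, and 12.1 through 12.4). Keep in mind that nvcc reports the installed toolkit; the pip wheels bundle their own CUDA runtime, so what ultimately matters is that your NVIDIA driver is new enough.

```python
import re
import subprocess

INDEX_URLS = {
    "cu118": "https://download.pytorch.org/whl/cu118",
    "cu121": "https://download.pytorch.org/whl/cu121",
}


def parse_nvcc_release(output):
    """Extract (major, minor) from nvcc -V text such as 'release 12.4, V12.4.131'."""
    match = re.search(r"release (\d+)\.(\d+)", output)
    if match is None:
        raise ValueError("could not find a CUDA release number in nvcc output")
    return int(match.group(1)), int(match.group(2))


def pick_index_url(major, minor):
    """Choose a PyTorch 2.1.2 wheel index for the CUDA versions covered in this guide."""
    if major == 12 and 1 <= minor <= 4:
        return INDEX_URLS["cu121"]  # 12.1 wheels also run on 12.2-12.4 setups
    if (major, minor) == (11, 8):
        return INDEX_URLS["cu118"]
    raise ValueError(f"CUDA {major}.{minor} is not covered by this guide")


if __name__ == "__main__":
    text = subprocess.run(["nvcc", "-V"], capture_output=True, text=True, check=True).stdout
    major, minor = parse_nvcc_release(text)
    print(f"CUDA {major}.{minor} -> {pick_index_url(major, minor)}")
```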

Using Pyramid Flow: Interface & Features

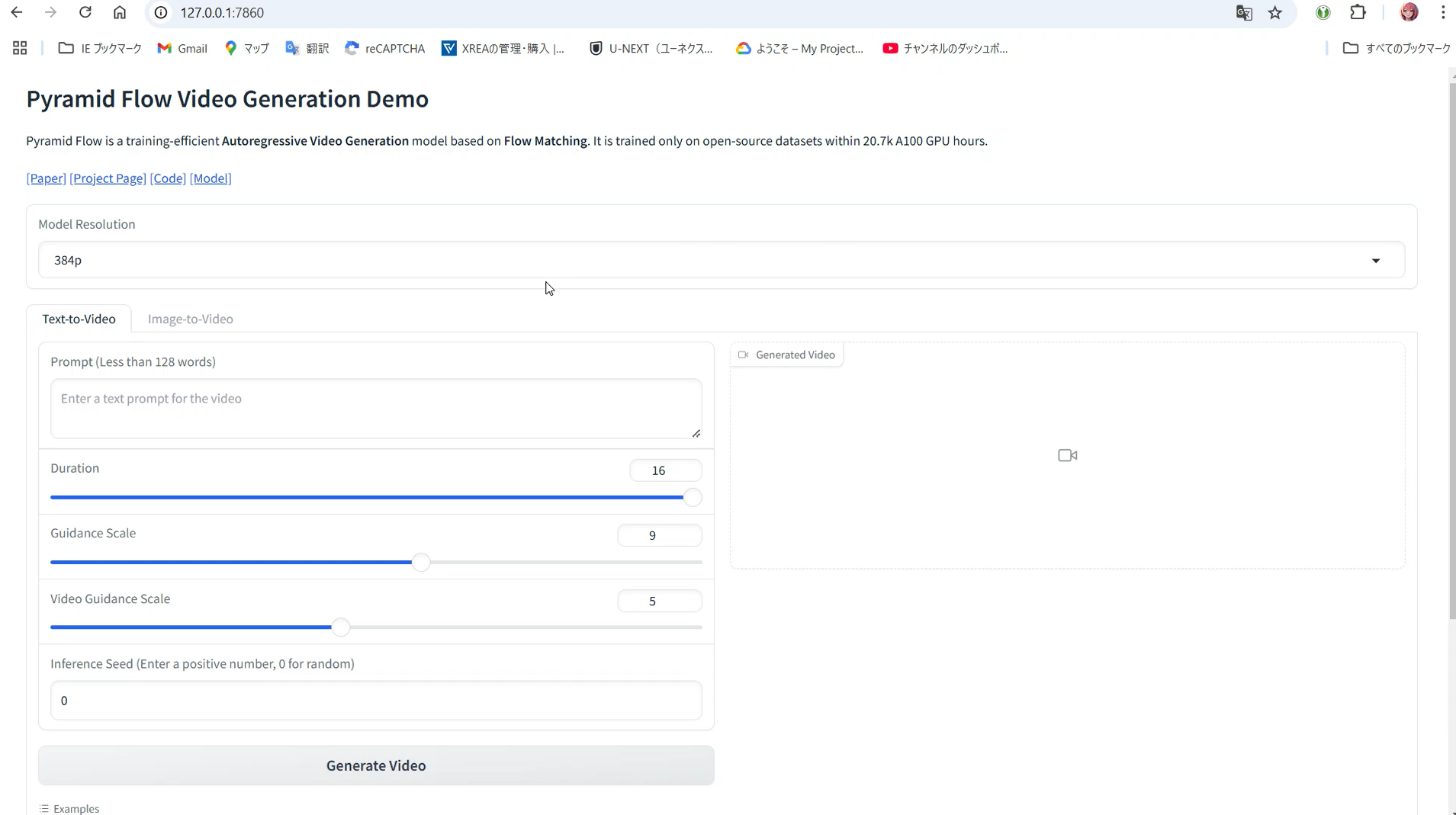

The Gradio Interface

Once launched, you’ll see a clean web interface with two main tabs:

✨ Text-to-Video Generation

Transform your written descriptions into videos:

Input Parameters:

- Prompt: Detailed description of your desired video

- Duration (set in latent frames, not video frames):

- 384p: up to 16 (about 5 seconds at 24fps)

- 768p: up to 31 (about 10 seconds, roughly 241 frames at 24fps)

- Guidance Scale: 7.0-9.0 recommended for 768p, 7.0 for 384p

- Video Guidance Scale: Controls motion intensity (4.0-5.0 typical)

- Resolution: 384p (faster) or 768p (higher quality)
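The Duration value is easier to reason about once you convert it to frames and seconds. The project's documentation maps temp values 1 to 31 onto 1 to 241 output frames; the sketch below assumes the simplest mapping consistent with those endpoints (8 video frames per latent frame after the first), so treat intermediate values as approximate.

```python
FPS = 24  # Pyramid Flow's output frame rate


def temp_to_frames(temp):
    """Map the latent-frame setting (1-31) to output video frames.

    Assumption: 8x temporal compression with the first frame kept on its own,
    which reproduces the documented endpoints (1 -> 1 frame, 31 -> 241 frames).
    """
    if not 1 <= temp <= 31:
        raise ValueError("temp must be between 1 and 31")
    return (temp - 1) * 8 + 1


def temp_to_seconds(temp, fps=FPS):
    """Approximate clip length in seconds for a given latent-frame setting."""
    return temp_to_frames(temp) / fps


if __name__ == "__main__":
    for t in (1, 16, 31):
        print(t, temp_to_frames(t), round(temp_to_seconds(t), 2))
```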

Example Prompt:

“A movie trailer featuring the adventures of the 30 year old space man wearing a red wool knitted motorcycle helmet, blue sky, salt desert, cinematic style, shot on 35mm film, vivid colors”

🎨 Image-to-Video Generation

Animate your static images:

Input Parameters:

- Input Image: Upload a high-quality starting image

- Prompt: Describe the desired motion/animation

- Motion Guidance: Fine-tune animation intensity

- Other Settings: Similar to text-to-video mode

Example Prompt for Image Animation:

“FPV flying over the Great Wall”

Advanced Features & Optimization

Multi-GPU Inference

For users with multiple GPUs, Pyramid Flow supports sequence parallelism:

# For 2 GPUs

CUDA_VISIBLE_DEVICES=0,1 sh scripts/inference_multigpu.sh

# For 4 GPUs

CUDA_VISIBLE_DEVICES=0,1,2,3 sh scripts/inference_multigpu.sh
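If you'd rather launch the multi-GPU script from Python (for example, from a batch job), a thin wrapper only needs to set CUDA_VISIBLE_DEVICES and call the script. build_multigpu_run is a hypothetical name of my own; the wrapper knows nothing about the script's internals, so check the script for any GPU-count setting that also needs to match.

```python
import os
import subprocess

SUPPORTED_GPU_COUNTS = (2, 4)  # the configurations shown above


def build_multigpu_run(gpu_ids, script="scripts/inference_multigpu.sh"):
    """Return (command, environment) for launching the multi-GPU inference script."""
    if len(gpu_ids) not in SUPPORTED_GPU_COUNTS:
        raise ValueError(f"expected 2 or 4 GPUs, got {len(gpu_ids)}")
    env = dict(os.environ)  # copy so the caller's environment is untouched
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return ["sh", script], env


if __name__ == "__main__":
    cmd, env = build_multigpu_run([0, 1, 2, 3])
    subprocess.run(cmd, env=env, check=True)  # run from the Pyramid-Flow directory
```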

Performance Improvement:

- Single A100: 5.5 minutes for 5s/768p/24fps video

- 4x A100s: 2.5 minutes for the same video

CPU Offloading for Limited VRAM

Enable CPU offloading to keep GPU memory usage under 8GB, which is what makes 8GB cards workable:

model.enable_sequential_cpu_offload()

Apple Silicon Support

Pyramid Flow now includes experimental support for Apple Silicon (M1/M2/M3) Macs, though performance will be slower than NVIDIA GPUs.

Memory Management & Performance Tips

Understanding VRAM Usage

Monitor your GPU memory usage:

import torch

if torch.cuda.is_available():

    print(f"GPU: {torch.cuda.get_device_name(0)}")

    print(f"Total Memory: {torch.cuda.get_device_properties(0).total_memory / 1024**3:.1f}GB")

    print(f"Allocated: {torch.cuda.memory_allocated() / 1024**3:.2f}GB")

    print(f"Reserved (cached): {torch.cuda.memory_reserved() / 1024**3:.2f}GB")

Optimization Strategies

- Start with Lower Resolution

  - Test prompts at 384p first

  - Move to 768p for final renders

- Reduce Frame Count for Testing

  - Use shorter durations during experimentation

  - Increase to full length for final output

- Clear GPU Memory Between Generations

torch.cuda.empty_cache()

- Use VAE Tiling for Large Videos

model.vae.enable_tiling()
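Clearing memory between generations works best when Python has actually released the old tensors, so a small helper can run the garbage collector before asking PyTorch to return cached blocks. This is a generic sketch, not a Pyramid Flow API. Note that torch.cuda.empty_cache() can't free memory still referenced by live objects, such as a frames list you're keeping around.

```python
import gc


def free_gpu_memory():
    """Release cached GPU memory between generations; safe to call without a GPU."""
    gc.collect()  # drop unreachable Python objects first so their tensors can be freed
    try:
        import torch
    except ImportError:
        return False  # PyTorch not installed, nothing more to do
    if torch.cuda.is_available():
        torch.cuda.empty_cache()  # return unused cached blocks to the driver
        return True
    return False
```

Call it after del frames (or whatever holds the previous output) and before starting the next generation.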

Common Issues & Solutions

Python Version Mismatch

Issue: Python showing wrong version even after setting with pyenv

Solution:

- Close VSCode completely

- Restart VSCode

- Verify with python --version

- Check that a .python-version file exists in the project directory
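pyenv picks the interpreter from the .python-version file that pyenv local writes, so comparing that file with the interpreter you're actually running pinpoints the mismatch quickly. This helper is my own sketch and only looks at the first version listed in the file.

```python
import sys
from pathlib import Path


def pyenv_pin_status(project_dir="."):
    """Compare the project's .python-version pin with the running interpreter."""
    pin_file = Path(project_dir) / ".python-version"
    if not pin_file.is_file():
        return "missing: no .python-version file (run pyenv local 3.8.10 here)"
    entries = pin_file.read_text().split()
    if not entries:
        return "empty: .python-version has no version in it"
    pinned = entries[0]  # pyenv allows several versions; the first is the primary one
    running = ".".join(str(part) for part in sys.version_info[:3])
    if pinned == running:
        return f"ok: running {running}"
    return f"mismatch: pinned {pinned}, running {running}"


if __name__ == "__main__":
    print(pyenv_pin_status())
```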

CUDA Not Available

Issue: [WARNING] CUDA is not available. Proceeding without GPU.

Solution:

- Verify CUDA installation: nvcc -V

- Reinstall PyTorch with the correct CUDA version

- Ensure NVIDIA drivers are up to date
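When that warning appears, the quickest diagnosis is to ask PyTorch what it was built with. The sketch below collects the usual suspects: a CPU-only build reports built_with_cuda as None, while a CUDA build that still can't see a GPU usually points to the driver. It's a plain diagnostic helper of my own, not part of Pyramid Flow.

```python
def cuda_diagnostics():
    """Collect the facts that usually explain a 'CUDA is not available' warning."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}
    return {
        "torch_installed": True,
        "torch_version": torch.__version__,  # a +cpu suffix means a CPU-only wheel
        "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
    }


if __name__ == "__main__":
    for key, value in cuda_diagnostics().items():
        print(f"{key}: {value}")
```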

Out of Memory Errors

Issue: CUDA out of memory errors during generation

Solutions:

- Enable CPU offloading

- Reduce resolution to 384p

- Decrease frame count

- Close other GPU applications

- Use multi-GPU setup if available

Model Download Issues

Issue: Slow or failed model downloads

Solutions:

- Use a stable internet connection

- Download models separately using Hugging Face CLI

- Consider using a download manager for large files

- Verify sufficient disk space (models are several GB)
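Two of these tips are easy to script: checking free space before you start, and retrying a flaky download. The helpers below are generic sketches with names of my own. The 40 GB threshold in the example is an assumption for headroom, not a published checkpoint size, so adjust it to what you're fetching.

```python
import shutil
import time


def has_free_space(path, required_gb):
    """True if the filesystem holding `path` has at least `required_gb` free."""
    return shutil.disk_usage(path).free >= required_gb * 1024**3


def download_with_retries(download, attempts=3, delay_seconds=10):
    """Call `download()` until it succeeds, waiting between failed attempts."""
    for attempt in range(1, attempts + 1):
        try:
            return download()
        except Exception as exc:  # network failures surface as several exception types
            if attempt == attempts:
                raise
            print(f"attempt {attempt} failed ({exc}); retrying in {delay_seconds}s")
            time.sleep(delay_seconds)


if __name__ == "__main__":
    from huggingface_hub import snapshot_download

    REQUIRED_GB = 40  # assumption: generous headroom, not an official size
    if not has_free_space(".", REQUIRED_GB):
        raise SystemExit(f"need at least {REQUIRED_GB} GB free")
    download_with_retries(
        lambda: snapshot_download("rain1011/pyramid-flow-miniflux", local_dir="./models", repo_type="model")
    )
```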

Alternative Ways to Use Pyramid Flow

1. Google Colab

For those without local GPU access:

# Setup in Colab

!git clone https://github.com/jy0205/Pyramid-Flow

%cd Pyramid-Flow

!pip install -r requirements.txt

!pip install gradio

# Download models

from huggingface_hub import snapshot_download

model_path = '/content/Pyramid-Flow'

snapshot_download("rain1011/pyramid-flow-miniflux",

local_dir=model_path,

local_dir_use_symlinks=False,

repo_type='model')

# Start

!python app.py

2. Hugging Face Spaces

An official demo is available on Hugging Face Spaces, allowing you to test the model without any local setup.

3. Direct Python Script

For integration into existing projects:

import torch

from pyramid_dit import PyramidDiTForVideoGeneration

from diffusers.utils import export_to_video

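# Initialize model in bf16

model_dtype, torch_dtype = 'bf16', torch.bfloat16

model = PyramidDiTForVideoGeneration(

    'path/to/models',  # directory holding the downloaded checkpoint

    model_name="pyramid_flux",  # Use miniFLUX

    model_dtype=model_dtype,

    model_variant='diffusion_transformer_768p',

)

model.vae.enable_tiling()  # decode the VAE in tiles to save memory

model.enable_sequential_cpu_offload()  # keep idle modules on the CPU

# Generate video

prompt = "Your creative prompt here"

with torch.no_grad(), torch.cuda.amp.autocast(enabled=True, dtype=torch_dtype):

    frames = model.generate(

        prompt=prompt,

        num_inference_steps=[20, 20, 20],

        video_num_inference_steps=[10, 10, 10],

        height=768,

        width=1280,

        temp=31,  # 31 latent frames, about 10 seconds (16 is about 5 seconds)

        guidance_scale=7.0,

        video_guidance_scale=5.0,

        output_type="pil",

        save_memory=True,

    )

export_to_video(frames, "output.mp4", fps=24)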

Latest Developments & Future Outlook

Recent Updates (as of 2025)

- MiniFLUX Architecture: Significant improvements in human anatomy and motion coherence

- Extended Generation: Support for up to 10-second videos at 768p

- Multi-GPU Optimization: Dramatically reduced generation times

- Training Code Release: Full training pipeline now available for researchers

- ICLR 2025 Acceptance: Academic recognition of the pyramidal flow matching technique

Comparison with Other Models

Pyramid Flow stands out in the crowded AI video generation space:

- vs OpenAI Sora: Fully open-source alternative with comparable quality

- vs Runway Gen-3: Free to use, though slightly slower generation

- vs Stable Video Diffusion: Better temporal consistency and longer videos

- vs CogVideoX: More efficient memory usage and better motion quality

Community & Resources

- GitHub Repository: github.com/jy0205/Pyramid-Flow

- Project Page: pyramid-flow.github.io

- Hugging Face Models: rain1011/pyramid-flow-miniflux and rain1011/pyramid-flow-sd3

- Technical Paper: Available on arXiv (arXiv:2410.05954)

Final Thoughts & Best Practices

After extensive testing with Pyramid Flow, here are my key recommendations:

For Best Results:

- Prompt Engineering: Be specific about style, lighting, camera movement

- Start Small: Test at 384p before committing to 768p renders

- Use MiniFLUX: The newer architecture produces better human figures

- Leverage Memory-Saving Features: Enable VAE tiling and CPU offloading when VRAM is tight

- Iterate Quickly: Generate multiple variations with slight prompt changes

Creative Applications:

- Content Creation: YouTube intros, social media content

- Prototyping: Storyboards, concept visualization

- Education: Explanatory animations, historical recreations

- Art Projects: Experimental video art, music videos

- Marketing: Product demonstrations, promotional content

The field of AI video generation is evolving at breakneck speed. What seemed impossible just a year ago is now running on consumer hardware. With Pyramid Flow being open-source and continuously improved by the community, we’re witnessing the democratization of professional video creation tools.

Whether you’re working with an 8GB gaming GPU or a multi-GPU workstation, Pyramid Flow offers a pathway into the future of content creation. The key is to start experimenting now, understanding the technology’s capabilities and limitations, and finding creative ways to integrate it into your workflow.

Remember: The most impressive videos don’t always come from the most powerful hardware — they come from creative minds working cleverly within their constraints. Happy creating!

This guide is based on hands-on testing with Pyramid Flow as of 2025. For the latest updates and features, always refer to the official GitHub repository and documentation.